Career

Interviews, Internship Reviews, and Personal Growth

I interned as a software engineer at Lablup, a Korean startup, for seven weeks. After my Goldman Sachs internship ended, I had a lot of free time before the fall semester began. I started with the mindset of "I might as well do something productive," and it turned out to be a valuable experience.

Internship Application Process

Lablup was a mentor company for Contributhon, operated by OSS. Contributhon is a competition where participants are matched with companies to contribute to their open-source projects for six weeks. I briefly considered participating in this competition but wanted to work full-time, so I contacted Lablup's founder/CEO, interviewed, and started working.

About Lablup

Lablup is one of Korea's leading tech startups, developing Backend.AI, a resource management platform for AI research, as an open-source project.

While Google Colab is convenient and useful for simple tasks, its limitations become apparent when you need programs that only run on specific OSes or when you work with large datasets. Therefore, institutions conducting serious AI research typically don't rely on Google Colab. When I was at Naver, we used an internal platform called NSML, and at Onclusive, we trained models on AWS instances to get enough GPU capacity. The AWS approach was straightforward but quite cumbersome: each time, I had to install the necessary programs, transfer local files via scp, and remember to stop the instance after use.

Lablup earns revenue by providing B2B technical support for Backend.AI to various institutions. Around the time I started my internship, they launched a service targeting general consumers, similar to Google Colab. It's more versatile and useful than Google Colab but is a paid service.

Internship Experience

Instead of working on one large project for seven weeks, I resolved various issues. Backend.AI is very complex, so it took several days just to set it up and get it running on my personal computer, and about a month to understand the system's workflow. To run everything locally, including the UI, I had to have six programs running. Working with six terminals open simultaneously was actually pretty cool, though. Anyway, the work I did as an intern is as follows:

Even Allocation of GPU

Some research code assumes that the workload is split evenly across GPUs when a model is trained on multiple GPUs. Therefore, we decided to develop an even-allocation algorithm instead of using a simple greedy method. For example, if a 0.3 GPU workload needed to be distributed across two slots that could each provide up to 0.2 GPU, we programmed it to choose allocations of 0.15/0.15 rather than 0.2/0.1.
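To make the 0.15/0.15 example concrete, here is a minimal sketch of one way to split a request evenly under per-slot capacity limits. It is not the code that went into Backend.AI; the function name `allocate_evenly` and the "fill the smallest slots first" approach are my own assumptions for illustration. `Decimal` is used so that fractional GPU amounts such as 0.15 compare exactly.

```python
from decimal import Decimal


def allocate_evenly(requested, capacities):
    """Split `requested` across slots as evenly as their capacities allow.

    Hypothetical helper for illustration only. Slots are visited from the
    smallest capacity to the largest; each one receives an equal share of
    what is still unassigned, capped at its own capacity.
    """
    requested = Decimal(str(requested))
    caps = [Decimal(str(c)) for c in capacities]
    if requested > sum(caps):
        raise ValueError("requested amount exceeds total capacity")

    alloc = [Decimal(0)] * len(caps)
    remaining = requested
    order = sorted(range(len(caps)), key=lambda i: caps[i])
    for pos, i in enumerate(order):
        # Equal share of the remainder among the slots not yet assigned.
        share = remaining / (len(order) - pos)
        alloc[i] = min(caps[i], share)
        remaining -= alloc[i]
    return alloc


if __name__ == "__main__":
    # The example from the text: 0.3 GPU across two 0.2-capable slots.
    print(allocate_evenly("0.3", ["0.2", "0.2"]))
    # A slot that cannot hold an equal share is filled up, and the rest
    # goes to the larger slot.
    print(allocate_evenly("0.5", ["0.1", "0.5"]))
```

A greedy method would instead fill the first slot to 0.2 and put the leftover 0.1 on the second one. The real implementation had to handle many more scenarios than this sketch does.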

I spent most of my time developing the algorithm. It felt like solving LeetCode challenges, but I had to consider far more scenarios than in typical coding problems, and there were more edge cases than expected, which made it difficult to write clean code. I also wrote the code that integrates the algorithm into the system.

etcd3 Library Replacement

Backend.AI uses asyncio extensively. python-etcd3 is an open-source library that enables the use of etcd with asyncio, but it hadn't been maintained for a long time, and a grpc-related error that occurred when the Backend.AI agent terminated had gone unfixed for quite some time. So I found a library called aetcd3, a fork of etcd3aio (itself a fork of python-etcd3), made a few modifications, replaced python-etcd3 with it, and got Backend.AI running without errors in my local environment. However, replacing an external library in a system as complex as Backend.AI requires great caution, so my work was ultimately not merged during my internship. Nevertheless, by experimenting with various open-source libraries and reading the issues people had posted, I gained some insight into the open-source community.

Github Link - Issue

XFS Storage Proxy

XFS is a file system created by Silicon Graphics. I developed the XFS storage proxy for Backend.AI. Backend.AI uses a virtual folder system. Users can create their own personal virtual folders in the cloud and store files, libraries, or code. Of course, features like folder sharing, duplication, and access control are implemented. Virtual folders are actually stored in a specific structure on a file system designated by the program, and XFS is one of the file systems supported by Backend.AI.

Basic virtual folder functionality was already implemented, so I adapted it for XFS. In XFS, the file system is managed on a per-project basis, with project information stored in /etc/projid and /etc/projects. So, when new virtual folders were created or deleted, I updated these two files accordingly. Each project has a storage quota, and if the file capacity was exceeded, I manually increased the quota.
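The bookkeeping described above can be sketched roughly as follows. This is not the storage proxy code itself. It relies on the standard XFS project quota conventions, where each line of /etc/projects maps a numeric project ID to a directory and each line of /etc/projid maps a project name to that ID. The function names, the file path parameters, and the choice to build (not run) an `xfs_quota` command are assumptions made for this example.

```python
from pathlib import Path


def register_project(projects_file, projid_file, name, project_id, folder_path):
    """Append entries for a newly created virtual folder (hypothetical helper)."""
    with open(projects_file, "a") as f:
        f.write(f"{project_id}:{folder_path}\n")  # /etc/projects: ID -> directory
    with open(projid_file, "a") as f:
        f.write(f"{name}:{project_id}\n")  # /etc/projid: name -> ID


def _drop_lines(path, should_drop):
    """Rewrite a file, keeping only the lines for which should_drop is false."""
    p = Path(path)
    kept = [ln for ln in p.read_text().splitlines() if not should_drop(ln)]
    p.write_text("".join(ln + "\n" for ln in kept))


def unregister_project(projects_file, projid_file, name, project_id):
    """Remove the entries of a deleted virtual folder (hypothetical helper)."""
    _drop_lines(projects_file, lambda ln: ln.startswith(f"{project_id}:"))
    _drop_lines(projid_file, lambda ln: ln.startswith(f"{name}:"))


def quota_command(name, hard_limit, mount_point):
    """Build the xfs_quota invocation that sets a project's block hard limit.

    The command is only constructed here; running it requires root and a
    file system mounted with project quotas enabled.
    """
    return ["xfs_quota", "-x", "-c", f"limit -p bhard={hard_limit} {name}", mount_point]
```

Raising a quota then amounts to running the command returned by `quota_command` with a larger limit, for example via `subprocess.run` on the storage host.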

Since this was my first experience with a file system like XFS, it took quite some time to understand its working principles and usage. As macOS does not support XFS, I created an Ubuntu instance on AWS to work. Since I had to work exclusively in the terminal, I learned how to use tmux and vim, which turned out to be surprisingly fun.

Github Link - PR

Leave Virtual Folder

I implemented a feature allowing users to leave shared virtual folders. When a user leaves a virtual folder shared by multiple people, I applied the changes to the managing database and revoked the user's access rights to that folder. I also enabled this functionality through the Python client. This involved standard backend work, implementing API endpoints and related functions.

Github Link - Issue, PR (manager), PR (client-py)

Clone Virtual Folder

The ability to clone a shared or group virtual folder to a personal virtual folder is useful when users want to easily start new projects using existing ones as templates or experiment with the contents of shared virtual folders. Similar to the 'Leave Virtual Folder' task, I implemented the related functionalities in the Backend.AI agent, manager, and Python client.

Github Link - Issue, PR (manager), PR (client-py)

Update Installation Script

I updated the installation script that installs all necessary programs to run Backend.AI and configures the environment appropriately. The goal was to make it so that running the script would set up Backend.AI to run immediately without further manual intervention. This included tasks like configuring etcd and PostgreSQL in Docker.

Github Link - Issue1, Issue2, PR

Global Dotfiles

Backend.AI allows users to modify configuration files like ~/.bashrc or ~/.zshrc in their respective containers. Since administrators also need to be able to modify global configuration files for group settings, I implemented a feature to modify configuration files like /etc/bash.bashrc or /etc/profile at the domain or group level.

I enabled storing dotfiles in the PostgreSQL table that manages groups and domains, and added functionality to the Backend.AI manager, agent, and Python client to create, modify, and delete these dotfiles. I also modified the code to copy these dotfiles to the desired location when a kernel (compute session) starts.
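As a rough illustration of the last step, the sketch below writes a list of stored dotfiles under a directory that stands in for the container's file system root. The record layout (`path`, `data`, `perm`) and the function name `write_dotfiles` are assumptions for this example, not the actual Backend.AI schema or agent code.

```python
import os
from pathlib import Path


def write_dotfiles(root, dotfiles):
    """Materialize dotfile records under `root` (hypothetical helper).

    Each record is assumed to be a dict with:
      path: absolute path inside the container, e.g. "/etc/profile"
      data: file contents as a string
      perm: permission bits as an octal string, e.g. "644"
    """
    root = Path(root)
    written = []
    for item in dotfiles:
        # Re-root the absolute container path under the scratch directory.
        target = root / item["path"].lstrip("/")
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(item["data"])
        os.chmod(target, int(item["perm"], 8))
        written.append(target)
    return written
```

A production version would also need to reject paths containing `..` and decide how group-level and domain-level files override each other; the sketch leaves both out.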

Github Link - Issue, PR (manager), PR (agent), PR (client-py)

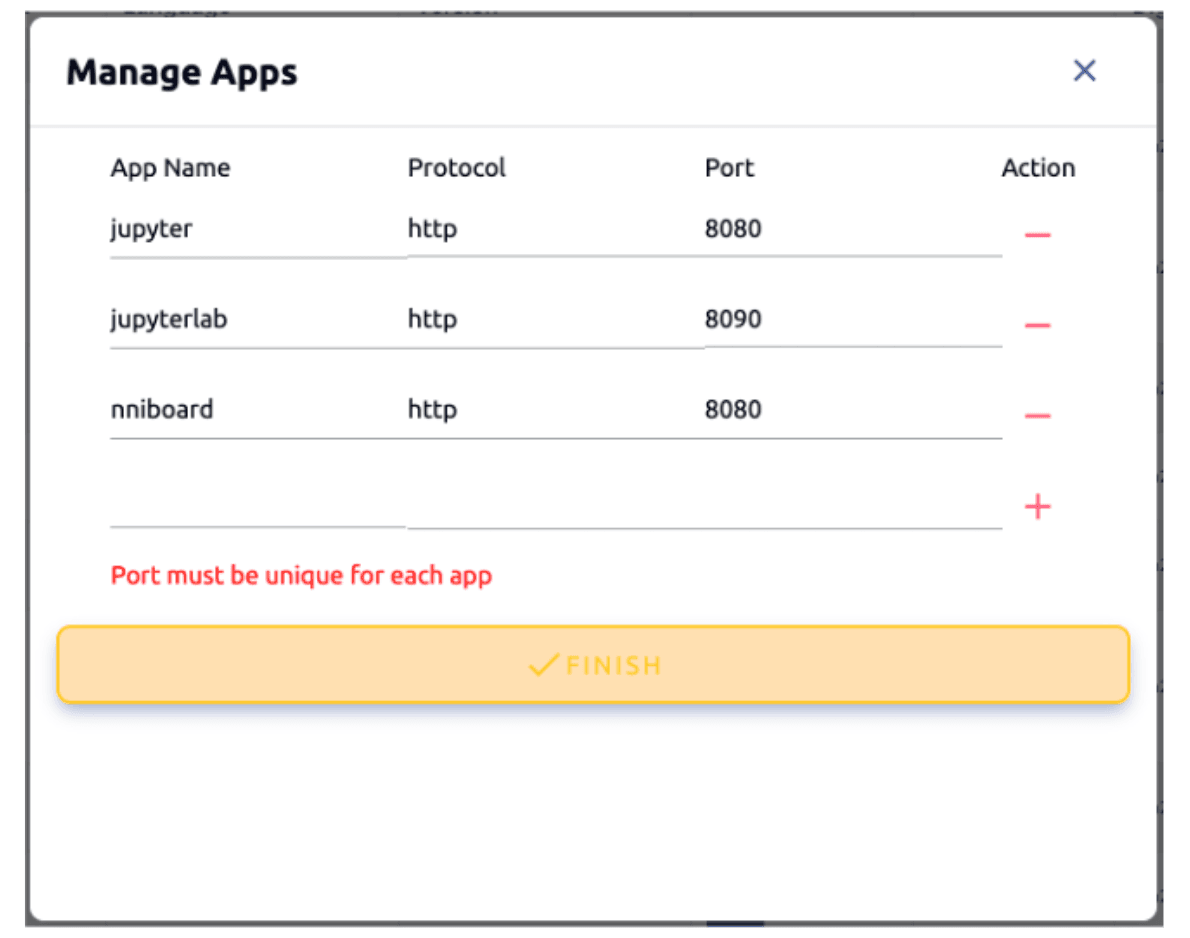

Service Port Validation

Apps like Jupyter Notebook run inside the Backend.AI container on specific ports, and users must register each service and its port number in advance. The port numbers users enter must satisfy several conditions: the same port number cannot be used twice, each must be a number between 1 and 65535, and none may be a port used internally by the system. I wrote code that validates the port numbers entered by the user before the kernel (compute session) starts and raises an error if there's an issue. I also updated the UI to display these errors.
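The three conditions can be expressed in a few lines. The following is a minimal sketch and not the code from the PRs: the function name, the `(service, port)` input shape, and the contents of `RESERVED_PORTS` are placeholders chosen for illustration.

```python
# Placeholder values; the ports actually reserved by the system are not listed here.
RESERVED_PORTS = frozenset({2000, 2001})


def validate_service_ports(services):
    """Check user-supplied (service_name, port) pairs before a session starts.

    Raises ValueError describing the first problem found.
    """
    seen = set()
    for name, port in services:
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(port, bool) or not isinstance(port, int):
            raise ValueError(f"{name}: port must be an integer")
        if not 1 <= port <= 65535:
            raise ValueError(f"{name}: port {port} is outside 1-65535")
        if port in RESERVED_PORTS:
            raise ValueError(f"{name}: port {port} is reserved for internal use")
        if port in seen:
            raise ValueError(f"{name}: port {port} is already used by another service")
        seen.add(port)


if __name__ == "__main__":
    validate_service_ports([("jupyter", 8080), ("tensorboard", 6006)])  # passes
    try:
        validate_service_ports([("jupyter", 8080), ("other", 8080)])
    except ValueError as exc:
        print(exc)
```

Raising on the first failure keeps the error message specific, which makes it easier to surface in the UI.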

Github Link - PR (manager), PR (agent), PR (console), PR (common)

Internship Review

The Lablup internship was challenging but enjoyable. Most of the technologies used to run Backend.AI were new to me, so the process of learning them while working was fascinating. The thrill I felt when the code I implemented worked correctly after hours of reading the existing codebase and various online documents was immense. It was meaningful to be able to work on various components of Backend.AI (manager, agent, console, Python client, installation scripts, storage proxy), and I especially learned a lot by getting to see the big picture of how the Backend.AI system operates.

Due to COVID-19, I worked remotely for most of the internship, but I think the company atmosphere was still good. The Lablup founders are exceptionally talented and thoroughly answered any questions I had. I also can't forget the dedication of some team members who would push code at 2:30 AM and then show up for the morning meeting. Working at a tech-focused startup demands more specialized skills and effort than other types of startups, but the sense of accomplishment seems to be correspondingly high. Seeing Lablup team members who have worked so diligently for years has also raised my own standards for hard work.